Maybe you already saw it on the news: a new Artificial Intelligence (AI) model called GPT-3 is ready to take over the world. This AI model is so “smart” that it can write articles that look like they were written by humans, answer any question and solve any problem. It will kill millions of jobs, and since it can generate source code, even software developers are now threatened. Some see this advance as the dawn of Skynet.

Are we on the verge of a new era, or is this mostly hype? What is the real reach of the GPT-3 model? I will try to answer these questions.

Let’s start at the beginning: what is GPT-3? GPT-3 stands for Generative Pre-trained Transformer 3, and it is the third generation of an AI model developed by OpenAI. Its main purpose is to predict text given a small clue or prompt. If you tell GPT-3 “Please write a poem”, it will write a poem; if you write the first paragraph of a news article, it will make up the rest. Want to see what GPT-3-produced text looks like? Take this example:

After two days of intense debate, the United Methodist Church has agreed to a historic split - one that is expected to end in the creation of a new denomination, one that will be "theologically and socially conservative," according to The Washington Post. The majority of delegates attending the church's annual General Conference in May voted to strengthen a ban on the ordination of LGBTQ clergy and to write new rules that will "discipline" clergy who officiate at same-sex weddings. But those who opposed these measures have a new plan: They say they will form a separate denomination by 2020, calling their church the Christian Methodist denomination.

The Post notes that the denomination, which claims 12.5 million members, was in the early 20th century the "largest Protestant denomination in the U.S.," but that it has been shrinking in recent decades. The new split will be the second in the church's history. The first occurred in 1968, when roughly 10 percent of the denomination left to form the Evangelical United Brethren Church. The Post notes that the proposed split "comes at a critical time for the church, which has been losing members for years," which has been "pushed toward the brink of a schism over the role of LGBTQ people in the church." Gay marriage is not the only issue that has divided the church. In 2016, the denomination was split over ordination of transgender clergy, with the North Pacific regional conference voting to ban them from serving as clergy, and the South Pacific regional conference voting to allow them.

Not so bad. At first sight it is not easy to spot that this was written by a bot. In fact, only 12% of tested subjects realized that this text was not a human-written article. Saying that GPT-3 just produces text is an understatement, since it can also handle common-sense reasoning tasks by answering questions posed in natural language.

Take, for example, the question “How to apply sealant to wood?”. It can answer: “Using a brush, brush on sealant onto wood until it is fully saturated with the sealant”. To do this, it has to interpret the question well enough to assemble an answer from what it learned during training.

It can also pick an answer from multiple-choice options.

For the question: “Which factor will most likely cause a person to develop a fever?”

and the following candidate answers:

a) a bacterial population in the bloodstream

b) a leg muscle relaxing after exercise

c) several viral particles on the skin

d) carbohydrates being digested in the stomach

It can identify the right answer (a in this case).

You can even provide examples to GPT-3 to teach it new tricks. There is a demo where a developer shows the model a question in plain English and its equivalent in SQL. After that, you can ask: “How many users have signed up since the start of 2020?”, and the model will respond with the SQL query needed to answer this question:

SELECT count(id) FROM users WHERE created_at > '2020-01-01'

This translation from “human language” to computer code has also been applied to HTML, Python and other languages. That is why some people think that programmers won’t be needed in the future.
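The “teaching by example” idea in that demo amounts to what is usually called a few-shot prompt: a handful of solved examples followed by the new question, with the answer slot left empty for the model to fill in. Here is a minimal sketch of how such a prompt could be put together. The two example pairs, the `orders` table and the `English:`/`SQL:` labels are my own assumptions for illustration, not the layout used in the demo, and the code only builds the text; it does not call any model.

```python
# Hypothetical solved examples shown to the model before the real question.
# The tables and queries are invented for illustration only.
EXAMPLES = [
    ("How many users do we have?",
     "SELECT count(id) FROM users"),
    ("How many orders were placed in 2019?",
     "SELECT count(id) FROM orders "
     "WHERE created_at >= '2019-01-01' AND created_at < '2020-01-01'"),
]


def build_few_shot_prompt(examples, question):
    """Lay out the solved examples, then the new question with an empty SQL slot."""
    blocks = [f"English: {q}\nSQL: {sql}" for q, sql in examples]
    # The prompt ends right after "SQL:" so the model's continuation is the query.
    blocks.append(f"English: {question}\nSQL:")
    return "\n\n".join(blocks)


prompt = build_few_shot_prompt(
    EXAMPLES,
    "How many users have signed up since the start of 2020?",
)
print(prompt)
```

The text printed here is what would be sent to the model as its prompt. Nothing is retrained; the examples only live inside the prompt.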

It can extract key points from a text, translate text from other languages to English and even translate “legalese” into plain English. These are some of the abilities of this model. Most of them are described in the GPT-3 pre-print, although we are expecting more to come.

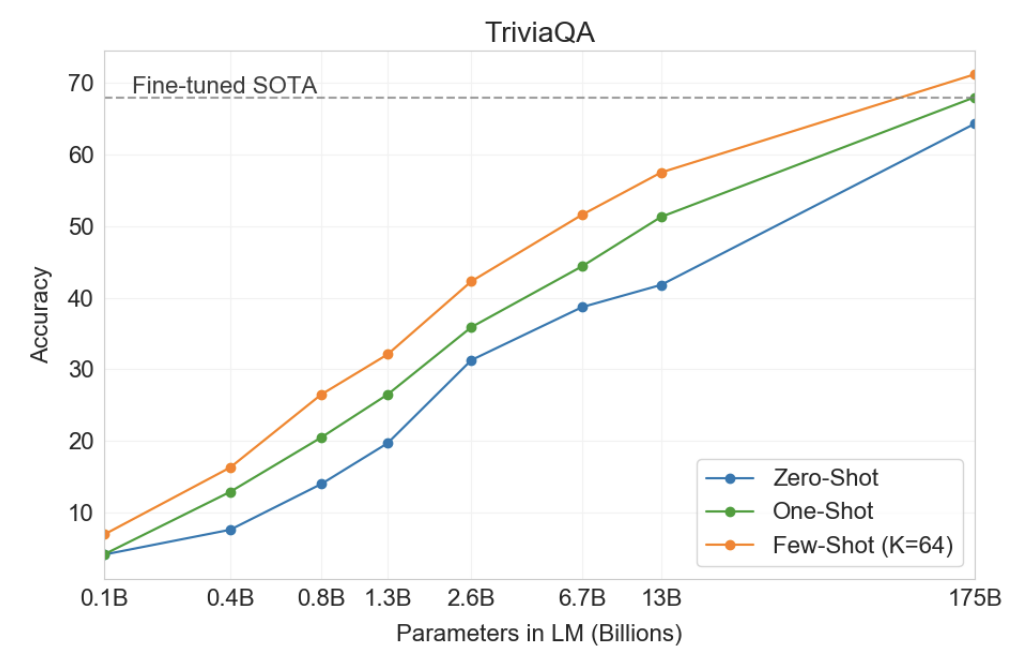

How does the model accomplish all these seemingly diverse tasks? The details of its inner workings are beyond the scope of this article. Suffice it to say that the model has 175 billion parameters and was trained on text drawn from about 45 TB of compressed data from different sources (news articles, Wikipedia articles, recipes, books, poems and more). At this size, the model is about 10 times larger than previous models of its kind. On most tasks, accuracy improved as the model grew, as can be seen in the accompanying chart.

These capabilities are not free of potential problems. The already mentioned destruction of jobs is just one. This model could be used by nefarious actors to create spam content, to commit fraud and scams such as fake news and fake reviews at scale, and for other uses that we can’t even imagine right now. The authors are not naïve and recognize that this technology can be abused. Another drawback of this AI is its energy footprint. A single training run consumes far more energy than a single inference, but training is done once while inference runs many times per second. According to OpenAI, the model consumes around 0.4 kWh for every 100 pages of text produced.
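To put the inference figure in perspective, here is a back-of-the-envelope calculation. It assumes that energy use scales linearly with the number of pages, which is my simplification and not something OpenAI states; the only input taken from the text is the 0.4 kWh per 100 pages figure.

```python
KWH_PER_100_PAGES = 0.4  # inference figure quoted above


def energy_kwh(pages, kwh_per_100_pages=KWH_PER_100_PAGES):
    """Energy in kWh to generate `pages` pages, assuming linear scaling (an assumption)."""
    return pages * kwh_per_100_pages / 100


one_page_wh = energy_kwh(1) * 1000        # convert kWh to Wh
million_pages_kwh = energy_kwh(1_000_000)

print(f"{one_page_wh:.1f} Wh per page")
print(f"{million_pages_kwh:.0f} kWh per million pages")
```

Under that assumption a single page costs about 4 Wh, and a million pages about 4,000 kWh. The per-page cost is small, so the footprint only becomes significant at very large volumes.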

We should also mention the possibility of bias and racism reproduced by the AI. The authors tested the generated text for gender, race and religion and found that it was indeed biased against women, Black people and Islam. The AI reproduces the bias it finds in the text corpus used for training.

Given the new capabilities that GPT-3 brings to the table, is the hype around this new model justified? Demos tend to show what works and hide the misses. When a generated text is long enough, it starts to lose coherence and falls into contradictions and other logical fallacies. The paper makes extensive comparisons with other models. When GPT-3 is compared on each task against task-specialized AIs, the result is not so clear. In most of the individual comparisons, the new model is either behind or at about the same level; in a few cases, GPT-3 outperforms the competition. If most of the time it is weaker than or about the same as the competition, why the hype? GPT-3 is the only model that can tackle so many problems with only one approach, and this is indeed impressive.

This is a promising new technology, but it is too early to tell if it will be the next big thing or just a passing fad. Most likely the big cloud providers (AWS, Azure, GCP and IBM) will set up a service like this. The CPU and GPU cost to train GPT-3 is estimated at around $5M to $10M, so not everybody can enter this game. We are one killer app away from this becoming a successful enterprise, and one screw-up like Microsoft’s “Tay” chatbot away from triggering legislation against it. Meanwhile, my advice to software developers is to request a place on the OpenAI waitlist, to be ready to try this technology as soon as it is available.